Security for the 2020s: Addressing the Engineering problem

“When you ask an engineer to make your boat go faster, you get the trade-space. You can get a bigger engine but give up some space in the bunk next to the engine room. You can change the hull shape, but that will affect your draw. You can give up some weight, but that will affect your stability. When you ask an engineer to make your system more secure, they put out a pad and pencil and start making lists of bolt-on technology, then they tell you how much it is going to cost.” Prof Barry Horowitz, UVA

“Human error is a symptom of a system that needs to be redesigned” Nancy Leveson, MIT

This piece references numerous other resources, as I’m honestly excited about the work some great people have been doing over the past decade, though it feels to me that the InfoSec industry has yet to catch up with it or fully grasp its significance.

There are numerous ways of framing this problem, so I decided to focus on the few that in my biased opinion (because everyone’s biased) have either greater relevance or future potential.

The field of Resilience Engineering is getting ever more mature. I particularly like the work that John Allspaw has been doing on the discipline itself, which Cyber Security needs to adopt and adapt, and the way Jabe Bloom approaches it from a Whole Work and sociotechnical practice perspective, which will be key for adoption.

I also particularly like its application to Cyber Security, as portrayed by Sounil Yu in his D.I.E. model and as discussed by Kelly Shortridge (from Capsule8 at time of writing) and Nicole Forsgren at Black Hat 2019, whose talk adds more practical advice on its implementation.

Finally, although John Allspaw already references and considers it, I believe InfoSec has more value to derive from approaches based on Nancy Leveson’s “Engineering a Safer and More Secure World” and from the related security practices that subsequently emerged for cyber-physical systems, like STPA-SafeSec. I believe there are still some technical challenges to overcome, which I’ll come to later.

Resilience Engineering

I absolutely love the work that John Allspaw is doing and how he presents it too, so I won’t even try to summarise or provide my take on his content but will instead point you to a list of his resources. My favourite of his recent talks is “Resilience Engineering: The What and the How” and in the link you can find video, slides and transcript.

For context, I’ll just mention his section on what Resilience Engineering is as it provides a discussion point for what will come after in this article:

On talking about what Resilience is, he says:

“It’s something a system (your organisation, not your software) does, not what it has.

Resilience is sustained adaptive capacity, or continuous adaptability to unforeseen situations”

In adapting these concepts of Resilience Engineering to Software Engineering, it’s clear that it’s all still in its infancy as a field. John Allspaw himself focuses a lot on learning from incidents and near-misses, whilst other authors have somewhat ‘equated’ Resilience Engineering to the practice of Chaos Engineering. I suspect both are key to it, but even more so is the people side of it and its implications for daily work, where Jabe Bloom’s sociotechnical practice of DevOps is the fundamental piece (i.e. how people interact to make the goal of building resilient systems achievable, which includes everyone from Knowledge Workers all the way up to Executive teams, as it has implications for all).

With the creation of new conferences like REDeploy and more tools being released to implement related practices, like Chaos Monkey from Netflix and Chaos Toolkit, this looks like the direction of travel for many organisations.

I would highly recommend that everyone read the material and watch the videos linked in this section for a true introduction and a better view of what it is and what it isn’t.

Time to D.I.E

This is a reference to Kelly Shortridge’s sentence and slides and to Sounil Yu’s D.I.E. model.

Again, I’d highly recommend listening to Sounil Yu’s podcast on the subject and viewing his slides.

For us security people, I believe the D.I.E. (Distributed, Immutable, Ephemeral) model stands in “opposition” (I’m unsure this is the best word but I do think it’s the closest) to the C.I.A. triad.

The C.I.A. triad is, to this day, still touted as the way to do security work. All our literature as a field references it, and anyone taking any type of course relating to Cyber security will be indoctrinated with it. But is that going to help us address the threats of the next decade?

As we move to different good and best practices for how we develop and operate our software, we’re now at a point where a focus on C.I.A. is likely to be more of an impediment to security than an assurance of it.

IT patterns using technologies such as Content Delivery Networks, Copy-on-write, Cloud Providers, Containerisation and Orchestration, Serverless architectures and even Blockchain and others are fundamentally changing the value provided by that old paradigm.

As Sounil Yu says “More than helping with security, these new patterns can eliminate the need for security at all”.

Putting it in a different way (and I appreciate the nuances in these statements, though I’m bound to be called on it which is good :)):

- If my system is highly distributed and can self-heal, why do I need to focus on single system availability?

- If all I do and develop is under version control and declarative in nature (thus immutable), why should I worry about integrity?

- If all my systems are now short-lived and do not allow for any persistence, and I adopt with them data engineering methods which decrease interactions with systems of record (ephemeral), why do I need to worry about confidentiality? (I appreciate this one is harder to grasp, but it’s also a reference to data design patterns which make use of privacy-preserving techniques like homomorphic encryption, multi-party computation and differential privacy, which you can find more about in the brilliant UN handbook for Privacy-preserving techniques)
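To make the immutable and ephemeral points a little more concrete, here is a minimal sketch in Python of a "replace, don't repair" loop. It is my own illustration rather than anything from Sounil Yu's material: the names `Instance`, `MAX_AGE`, `should_replace` and `reconcile` are hypothetical, and the 24 hour lifetime is an arbitrary assumption. An instance is thrown away and rebuilt from the version-controlled declaration if its running configuration has drifted from that declaration, or if it has simply lived too long.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Assumption for illustration: no instance may live longer than 24 hours.
MAX_AGE = timedelta(hours=24)


def digest(config: str) -> str:
    """SHA-256 fingerprint of a configuration, used to detect drift."""
    return hashlib.sha256(config.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class Instance:
    """Hypothetical record of a running system."""
    name: str
    started_at: datetime
    running_config: str


def should_replace(instance: Instance, declared_config: str, now: datetime) -> bool:
    """Replace when the instance drifted from the declaration or outlived MAX_AGE."""
    drifted = digest(instance.running_config) != digest(declared_config)
    expired = now - instance.started_at > MAX_AGE
    return drifted or expired


def reconcile(fleet, declared_config: str, now: datetime):
    """Return a fleet where every drifted or expired instance is rebuilt from the declaration."""
    return [
        Instance(i.name, now, declared_config)
        if should_replace(i, declared_config, now)
        else i
        for i in fleet
    ]


if __name__ == "__main__":
    now = datetime(2020, 1, 2, tzinfo=timezone.utc)
    declared = "image: web:1.4\nreadonly_rootfs: true\n"
    fleet = [
        Instance("web-1", now - timedelta(hours=1), declared),
        Instance("web-2", now - timedelta(hours=1), declared + "backdoor: true\n"),
        Instance("web-3", now - timedelta(hours=48), declared),
    ]
    for before, after in zip(fleet, reconcile(fleet, declared, now)):
        print(before.name, "replaced" if after is not before else "kept")
```

In this toy model a tampered or long-lived instance never gets investigated and patched in place; it is simply replaced, which is the sense in which the bullets above trade integrity and confidentiality worries for immutability and short lifetimes.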

“It turns out, if we leverage these three paradigms of DIE, we might not need to worry about CIA” Sounil Yu

Kelly Shortridge and Nicole Forsgren’s talk really brings these concepts to a more easily graspable level.

“Chaos and resilience is Infosec’s future. Therefore Infosec and DevOps priorities actually align” Kelly Shortridge

And relating back to immutability vs integrity, here is my favourite quote from their talk:

“Lack of control is scary, but unlimited lives are better than nightmare mode”.

Persistence from attackers is equated to nightmare mode, but if we treat our systems like cattle (disposable) and not pets (something we treat and cherish), then an attacker’s foothold is lost whenever we replace them.

The last third of Kelly and Nicole’s talk goes into detail on practical ways to adopt D.I.E. principles in your environment from a security perspective, and on ways to frame these challenges with your DevOps teams to ensure goal alignment.

One of the biggest insights, and a personal bugbear of mine for many years that Kelly also mentions, is that “DevOps team probably already collecting the data you need”. In the Infosec industry, we desperately need to stop equating security and visibility with our security toys, and aim to leverage and squeeze the most security value out of what our DevOps teams already have.

Following these ideas from Kelly and Nicole is the key to keeping InfoSec relevant into the 2020s, and it is the work to do as DevOps teams take more ownership of their security as another element of quality assurance.

Safety Engineering

A brilliant introduction to Safety Engineering by Nancy Leveson can be found here; from slide 56 it even includes references to Stuxnet, and the learnings we can take for Infosec never cease to amaze me.

We’re all still too much in the domain of considering the users “dumb”, and not focusing on enforcing safety and security constraints on system behaviour, which I believe will be the next frontier for security work.

There are 2 further pieces of work which I believe are highly relevant in applying these concepts to Cyber security (and to what these authors refer to as cyber-physical systems).

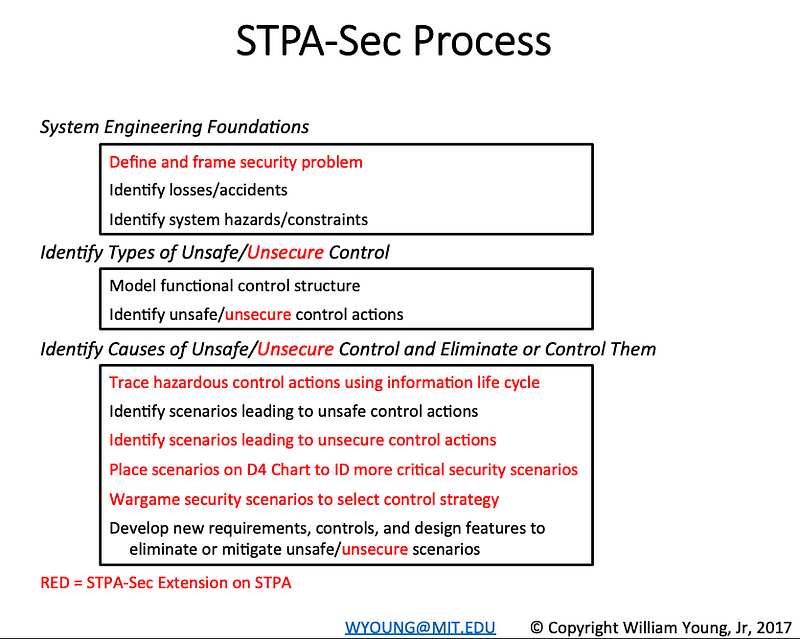

One of them is entitled STPA-Sec, a Security extension to STPA (you can find great reference material here).

As Kelly also mentions, Game days/Wargaming are critical to these activities, and as an Industry we need to get better at them and standardise our methods of approaching them. I particularly like Dr. Thomas’s adaptation of ‘Hazardous Control Actions’ to Cyber Security.

It makes us question what the risk is of not implementing a control, what can happen if we do implement it, and what happens if it’s triggered at the wrong time or in the wrong order, or if it’s stopped too soon or applied for too long. This is a brilliant way of thinking about the complexity of the controls we recommend and implement, and of considering their failure modes so we have a fighting chance of preventing complex failures from happening.
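The questions above can be turned into a repeatable checklist. The following is a minimal sketch, assuming a simple text-only review: the `Guideword` enum mirrors the four ways a control action can be hazardous as described in the paragraph above, while `review_questions`, the example control and the example context are hypothetical names and values I made up for illustration, not part of any STPA tooling.

```python
from enum import Enum
from typing import List


class Guideword(Enum):
    """The four ways a control action can be hazardous, as described above."""
    NOT_PROVIDED = "is not provided"
    PROVIDED = "is provided"
    WRONG_TIMING = "is triggered at the wrong time or in the wrong order"
    WRONG_DURATION = "is stopped too soon or applied for too long"


def review_questions(control: str, context: str) -> List[str]:
    """Build one review question per guideword for a given control and context."""
    return [
        f"What can go wrong if '{control}' {g.value} while {context}?"
        for g in Guideword
    ]


if __name__ == "__main__":
    # Hypothetical control and context, chosen only to show the output shape.
    for question in review_questions(
        control="account lockout after repeated failed logins",
        context="a credential stuffing attack is under way",
    ):
        print(question)
```

Running the four questions against every control in a design review is cheap, and for the lockout example it quickly surfaces the case where the control itself becomes a denial-of-service lever for the attacker.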

I think generally the practice of Threat Modelling doesn’t consider these in detail, and the STRIDE mnemonic is certainly insufficient for this space and the levels of complexity involved. So maybe we need a new mnemonic to deal with the implementation of controls themselves: PNTS (Provide, Not provide, Timing and Stopped), perhaps? I’m not good at these, I don’t think 🙂

Finally, the “latest” extension of this is called STPA-SafeSec (a name I hate, by the way). It can be found here and provides a very interesting approach (albeit a bit too academic for general use for now, in my opinion), defining a taxonomy of constraints and components which can be very useful from a Threat modelling perspective too.

A challenge with all these approaches is the lack of standard actuators and sensors, which makes centralised assessment of failure scenarios difficult. With wider adoption of Cloud Native capabilities this is becoming less of an issue, and I guess it just makes it more important to plan for ditching most of your security vendors anyway, as they’re unlikely to have a big role to play into the 2020s (I know, heresy).

Conclusion and what I didn’t say

As a former security engineer, I’m honestly excited about the 2020s from an Engineering perspective. Good secure design no longer means bolting security tech on top of existing operational systems; instead, the nature of the systems we’re building makes them inherently more secure (though obviously we still have some road to travel, particularly in wider adoption of privacy-preserving techniques).

As Infosec, we need to find our role in this new world of design patterns and Cloud native capabilities, which are often updated without our intervention or business impact. I believe this role will be to help provide the rationale for prioritising backlog items in the DevOps roadmap, to introduce shared practices such as Threat modelling into DevOps, and to collaborate more effectively with the teams to get the data we need to support Compliance and Risk Management from within their own tools and datasets.

What I didn’t do in any of this is mention any security technologies or next-gen AI, as that should not be the basis of your strategy. If it is, you’re into buzzword adoption and seriously outsourcing the hard work of thinking to an unproven piece of tech, and I cannot advise that as a strategy.

What this means in practice is that Infosec MUST embrace DevOps transformation and actually be the cheering supporters of it in the organisation, as opposed to what I still see as Infosec trying to undermine and slow down its rate of adoption.

Our organisations are so much better off with these new resilient design patterns than what we can realistically achieve with a focus on CIA, that it’s extremely disappointing for me to still see such pushbacks.

Let’s spend more energy and effort advocating for how to do DevOps securely (DevSecOps if you wish to call it that) and convincing other Infosec people of all the great benefits they can have in this new world that is coming, whether they want it or not, as it’s now a matter of business agility and even survival.

References:

Jabe Bloom, Whole Work and Sociotechnicity and DevOps https://www.youtube.com/watch?v=WtfncGAeXWU

John Allspaw, Resilience Engineering: The What and the How https://devopsdays.org/events/2019-washington-dc/program/john-allspaw/

Sounil Yu, New Paradigms for the Next Era of Security https://www.youtube.com/watch?v=LTT9xqUs7S8

Kelly Shortridge and Nicole Forsgren, Controlled Chaos — The Inevitable Marriage of DevOps and Security https://swagitda.com/speaking/us-19-Shortridge-Forsgren-Controlled-Chaos-the-Inevitable-Marriage-of-DevOps-and-Security.pdf

Nancy Leveson, Engineering a Safer and More Secure World http://sunnyday.mit.edu/workshop2019/STAMP-Intro2019.pdf

William Young Jr and Reed Porada, System-Theoretic Process Analysis for Security (STPA-Sec) https://psas.scripts.mit.edu/home/wp-content/uploads/2017/04/STAMP_2017_STPA_SEC_TUTORIAL_as-presented.pdf

Ivo Friedberg et al, STPA-SafeSec: Safety and Security analysis for Cyber-physical systems https://www.sciencedirect.com/science/article/pii/S2214212616300850