The missing dimension of security process development: protecting our employees

A quick post today on something that’s been on my mind lately. I made a quick Twitter post about it here, but will expand on it a bit in this blog post.

If you’re not following me on Twitter, you probably should. It’s where I’m most active on social media; I don’t really enjoy the dynamics of the other social media websites.

Back to the topic at hand…

I think we should all be able to agree that when we do the work of translating policy statements into standards and processes, we typically have one main goal in mind.

We’re trying to protect our organisations from liabilities relating to the activity we are creating the documents for, sometimes balancing that goal with concerns about business profitability. We’re trying to ensure that the process provides adequate protection at an acceptable price point (both in the cost of any technology used and in the operational effort to enact it). This is often complemented by some general concerns that relate more to Data Protection as a discipline: doing right by our clients and trying to ensure we’re protecting them from harms that may arise from their use of our products and services. Even this is often a secondary consideration that we leave to someone else to worry about (our Data Protection colleagues).

Now, in practice we know (or should know) that these processes are often created in a vacuum, written by people who never actually did the work they’re trying to constrain. I’d argue that even when we do bring in those affected as collaborators in co-creating these processes, two things still tend to be true:

- sociotechnical work is always underspecified – no matter how well we create these processes, they will never be able to account for all of the nuances and work variability that happen in the real world. This is not just a “security thing”. It is also true of most engineering and operational processes that our engineering/ops teams create and manage.

- security activities tend to exist in a time-sharing mode – we know from Rasmussen’s work in safety that the world our processes assume doesn’t actually exist. Take your static analysis security process as an example. We write it as something that is discrete and well-bounded when, in reality, it’s just another piece of feedback from CI/CD systems, time-sharing with the outputs of unit testing, integration testing, linting and the other things that CI/CD systems usually provide as feedback. This is the difference between “the work of security” and “the security of work”. Many of our security processes assume the former when the real world is about the latter.

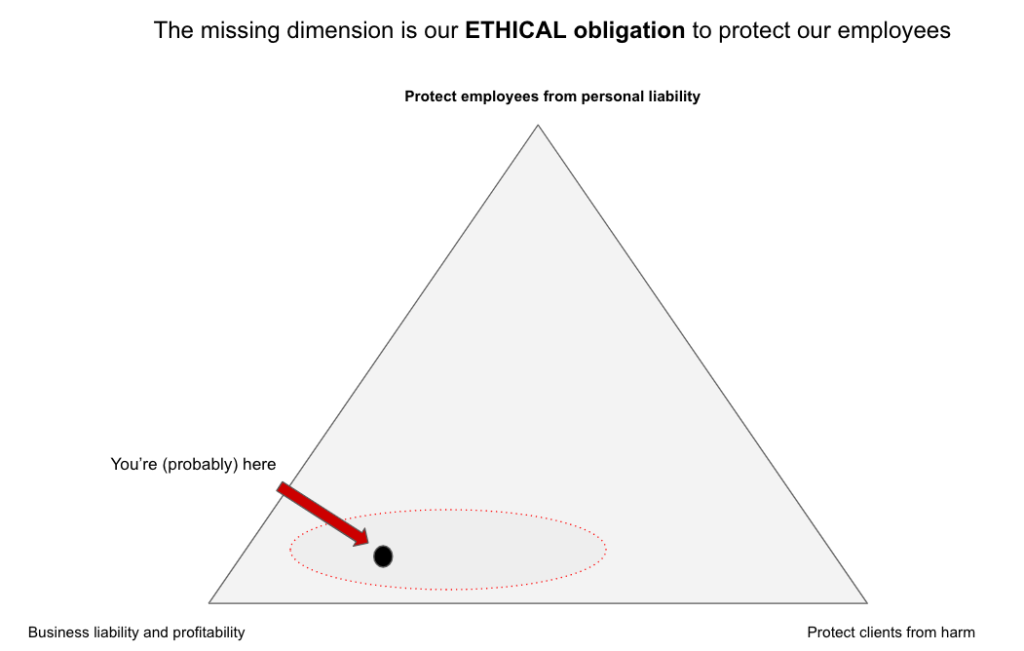

These two realities of living in a complex world, in my opinion, raise (or should raise) a third dimension in how we think about the role of security process development, the first two being business liability and profitability, and protecting clients from harm.

What I’d like to argue is that, given the two things I just raised on underspecification and time-sharing, a much-needed dimension for us (as GRC) is to consider that we have an ETHICAL obligation to protect our employees from personal liabilities relating to the security processes we create. We only need to look at the data breach reports published by data protection authorities to see a very common theme in all of them: a sentence that is almost a verbatim copy of “the organisation wasn’t following its own policies and procedures”. We can go straight to a facile explanation that it’s a “security culture problem” or that “engineers don’t care about security”, but in my opinion that just deflects from the role we played in creating policies and procedures which aren’t FIT for the operational environment we wish to affect. I’ve written before on why our security policies are business liabilities and what we should do about it; that post is relevant here and I’d recommend you read it as well.

Going back to my earlier example, that means that if we have processes which relate to actioning feedback from CI/CD systems, the name of the game is “attention management”. If we don’t know how much feedback engineers already get bombarded with for their own functional needs, then we can only do a poor job of helping them manage these goal conflicts, and we end up making assumptions in our processes that set them up to fail.

This is my one wish for our industry, from a GRC perspective: that we start considering our ETHICAL responsibility towards our employees to ensure that the things we write down in policies, standards and processes actually have a fighting chance of surviving real scrutiny and assurance, and that we listen to what they tell us about the goal conflicts and challenges they reconcile, and adapt our approaches accordingly.

Hoping you all help me with that 🙂